The Evolution of Fraudulent Schemes

Increased Misuse and Sophistication of AI to Commit Digital Fraud

The level of sophistication used by fraudsters continues to increase. Fraudsters are using voice cloning and face swapping, as well as other generative AI tools that continue to evolve. It is critical to constantly reassess internal controls and understand how AI is used and misused. In this article, the author shares some of the schemes used and issues a challenge to entities as well as to accountants and fraud examiners.

At this point, the idea of artificial intelligence (AI) generating content is nothing new. You have likely seen plenty of videos and pictures of AI-generated content on Facebook, YouTube, etc. When I started seeing this generative AI content, I was impressed, but the content was generally easy to spot and dismiss as fake. As easy as it was to spot those fake photos and videos, I had two thoughts at the time:

- “These generative AI tools are only going to get better as they train on more data,” and

- “Like any emerging technology, someone out there is going to use this as a vehicle to commit fraud.”

Based on those two trains of thought, I considered the implications of generative AI in the forensic accounting space, and I became increasingly concerned. As forensic accountants, we all learn about the three main ingredients of a fraud scheme: pressure (outside force pushing someone to commit fraud), rationalization (what someone tells themselves to make fraud an acceptable course of action), and opportunity (a breakdown in internal controls or other circumstances that allows fraud to occur).

Generative AI probably has not moved the needle up or down for the pressure or rationalization components, but opportunity? That has absolutely skyrocketed.

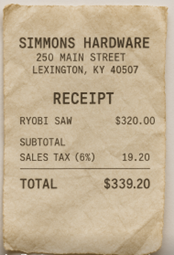

To understand why generative AI has so greatly increased the opportunity to commit fraud, take a moment to consider the barrier to entry for creating fraudulent documents. In the past, to create a forged document, like an invoice or receipt, the fraudster needed a decent bit of expertise with software like Photoshop and Acrobat. Those software tools cost money, and there is a time investment needed to learn how to use them to forge documents that can be used to conceal fraud. For someone who is weighing their opportunity to commit fraud, even that modest amount of work may be enough to deter them. Consider, however, that I can now go into ChatGPT and, with a few prompts, a little tweaking, and 5–10 minutes, create a realistic fake receipt.

It is not perfect. It certainly lacks detail (no date, no “thank you” message, etc.), and the folds in the paper do not carry over to the text on the image, but would it get by a quick review on an expense report? It would not surprise me at all if it did, and there are individuals out there who have created much more realistic examples. In my career as a forensic accountant, I have seen that abuse of employee reimbursement processes is one of the most prevalent forms of occupational fraud, especially within smaller, family-owned businesses with limited internal controls in place. These businesses often rely on manual review of documentation by a single employee. The days of relying solely on manual document review at any level are likely at an end.

If that is not concerning enough, just take this to its logical conclusion. If I can use AI tools to create forged receipts, could I create fake invoices from a shell company for a billing scheme? Could I create purchase orders and bills of lading to conceal the misappropriation of inventory? Could I create a fake ID and employment documentation to add a ghost employee to a payroll register? Fake bank statements to “validate” transfers to a personal account? The answer to all of the above is probably yes.

According to the ACFE’s Occupational Fraud 2024: A Report to the Nations, in 41% of cases reviewed, fraudsters created fraudulent physical documents to conceal their fraud scheme. According to the same report, 37% of cases involved altered physical documents, 31% involved the creation of fraudulent electronic documents/files, and 28% involved the alteration of electronic documents/files. Given the data, it appears that the forgery of documents is the most prevalent strategy for fraudsters to conceal their schemes.

Realistic deepfake documents like invoices, tax returns, and government-issued IDs are not nearly as simple to create as the receipt that I made using ChatGPT, but that is likely because the most common AI tools simply are not trained on those types of documents. The enterprising young fraudsters of the world, however, are certainly capable of training their own models on just this type of forgery. Data from Entrust Cybersecurity Institute indicates that in 2024, for the first time, the number of digital document forgeries surpassed the number of physical counterfeits. Entrust’s 2025 Identity Fraud Report identifies generative AI as a key tool that has allowed for this shift.

Occupational fraud is not the only threat that has been greatly enhanced by generative AI. There has also been a rash of scams in which external parties use generative AI to target businesses. These external fraud threats add another layer of concern because of the broad target demographic: individuals, businesses, and governmental entities are all susceptible to these attacks.

The same efficiency gains that generative AI brings to a professional setting can also be used by scammers to increase the volume and believability of the phishing messages they generate. The language translation and writing capabilities of a tool like ChatGPT can greatly reduce the amount of time needed for a scammer to create a convincing phishing message. Phishing e-mails can also be made even more realistic by training AI tools on real marketing messages. Fraudsters can even use AI-powered chatbots to respond to a target in real time to create a more convincing exchange. There are varying estimates of the overall impact of generative AI on phishing attacks, but some estimates indicate a 4,000% increase since the launch of ChatGPT in 2022.

External attacks from fraudsters can also use deepfake technology to target executive-level employees. Generative AI voice-cloning tools need a recording of only 15 seconds or less to create a realistic clone of an individual’s voice. The cloned voice can then be used on a call with another employee of the target company to “say” anything that the fraudster wants. This technology has already been widely used to clone the voices of public-facing figures, such as CEOs of large companies. In 2024, Ferrari was a target of one such attack. In that instance, the employee who was targeted took the correct steps to try to verify that the caller was who they purported to be. When the caller could not answer questions that were specific to the Ferrari CEO, the caller immediately disconnected.

A cloned voice can also be combined with face-swapping technology to create realistic deepfake videos, and even a live video call can be a deepfake impersonation. This happened in February of 2024, when a finance worker in Hong Kong was convinced to send $25 million to fraudsters posing as the company’s CFO over a video call. With the rate of improvement in the AI tools that are currently out there, this type of scheme will only become more prevalent.

According to a predictive study by Deloitte, fraud losses in the United States could reach as high as $40 billion by 2027, compared to $12.3 billion in 2023, due in large part to the use of generative AI tools. The opportunity to commit fraud has been transformed by the advancements in generative AI, and forensic accountants must apprise themselves of the ways in which it can be used by fraudsters.
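To put the two figures quoted above in perspective, the following is a minimal sketch of the compound annual growth rate they imply. It uses only the $12.3 billion (2023) and $40 billion (2027) endpoints from the text; the function name `implied_annual_growth` is a hypothetical helper introduced here for illustration and is not taken from the underlying study.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Endpoints quoted in the article, in billions of dollars.
rate = implied_annual_growth(12.3, 40.0, 2027 - 2023)
print(f"Implied annual growth: {rate:.1%}")
```

Under these assumptions, the projection works out to growth of roughly 34% per year over the four-year period.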

While the advancement of generative AI leads to additional threat vectors, it also gives us, as forensic accountants, the opportunity to encourage our clients to reassess their internal control framework. The tried-and-true internal controls that we promote should still be the first line of defense when responding to the elevated occupational fraud risk posed by generative AI. Robust red-flag reporting systems, proper segregation of duties, and employee background checks are all controls that must be in place to prevent occupational fraud. In addition, we need to ask ourselves: which internal control procedures are particularly vulnerable to AI-powered occupational fraud? The answer will vary based on the operational structure of the company, but it will also allow us to recommend additional preventative and detective controls.

When examining an electronic document, there are a few key indicators that it has been generated by an AI tool. Misspellings, blurred text, or random characters can all be indicators. Most AI tools are effective at generating an approximation of the image you ask for, but they often have a hard time overlaying realistic text on it. If the file appears to be a picture of a receipt, a closer look at the “paper” can show inconsistencies in shadows and in the angle of the paper compared to the text printed on it. Finally, you can work with an IT professional to examine the metadata of the file, as there will often be an indication that the document was created using a generative AI tool.
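As a rough illustration of the metadata check described above, the sketch below scans a file's raw bytes for strings that sometimes appear in the embedded metadata of AI-generated images. The marker list and the function names (`GENERATOR_MARKERS`, `find_generator_markers`, `scan_file`) are assumptions made for this example; they are not an authoritative or exhaustive list, and this is not a substitute for a proper forensic tool.

```python
from pathlib import Path

# Hypothetical, illustrative marker list (lowercase). Real generators may
# embed different strings, or none at all.
GENERATOR_MARKERS = [
    b"c2pa",
    b"openai",
    b"dall-e",
    b"midjourney",
    b"stable diffusion",
    b"firefly",
]


def find_generator_markers(data: bytes) -> list[str]:
    """Return the markers from GENERATOR_MARKERS found in the raw bytes."""
    lowered = data.lower()
    return [m.decode() for m in GENERATOR_MARKERS if m in lowered]


def scan_file(path: str) -> list[str]:
    """Read a file from disk and report any generator markers it contains."""
    return find_generator_markers(Path(path).read_bytes())
```

A hit is only a reason to look closer, and a clean result proves nothing: metadata is easily stripped by a screenshot, a re-save, or an upload to a messaging app, so this kind of check should supplement, not replace, the visual review and the internal controls discussed in this article.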

The same tools used by the fraudsters are also being used to develop novel detective controls. Currently, AI detectors are mainly limited to identifying text-based generative AI content, but we expect that more robust AI-powered transaction monitoring and deepfake document detection tools will begin to trickle down from the large financial institutions over the coming years. For now, though, educating ourselves and our clients on the fraud risk posed by generative AI is one of the most important steps we can take.

The generative AI boom that we are living through has created an all-time low barrier to entry for fraud. Fraud schemes that once required extensive time and monetary investment to conceal have now become accessible with nothing but an internet connection and a few clicks. We cannot control how fraudsters use generative AI, but we can help our clients stay one step ahead by keeping them up to date on the associated risks. If you have been lagging in your understanding of generative AI, now is the time to get caught up. AI-enabled fraud is no longer a threat on the horizon; it is here, and likely here to stay.

Nathan Simmons, CPA, CFF, CFE, is a forensic and valuation services consultant with Dean Dorton. He obtained his Bachelor and Master of Science in Accounting degrees from the University of Kentucky. He has been a part of Dean Dorton’s Forensic Accounting team since joining the firm in 2021. Mr. Simmons is a forensic accounting consultant specializing in litigation support, fraud prevention, detection and deterrence, and internal control assessment. He is a CPA, with a specialized Certification in Financial Forensics from the AICPA as well as a Certified Fraud Examiner through the ACFE.

Mr. Simmons may be contacted at (859) 425-7681 or by e-mail to nsimmons@deandorton.com.